Adversarial Attack in AI Pathology

Trick an imaging-diagnostic AI into outputting whatever you want.

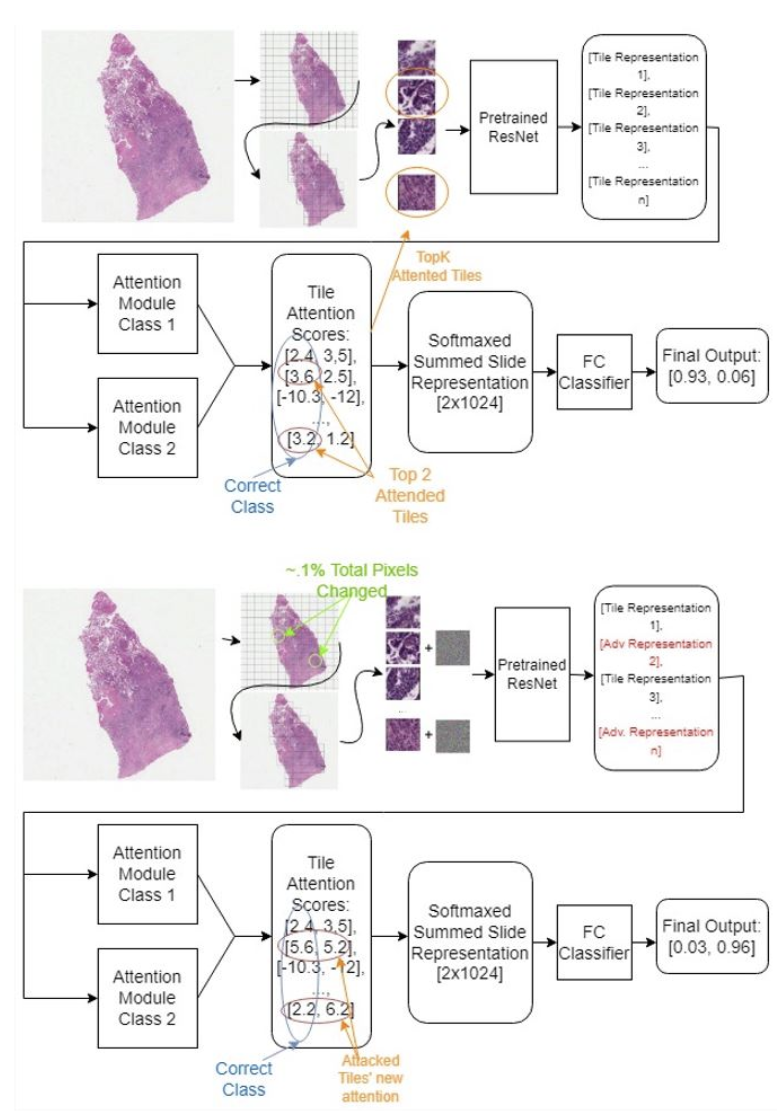

Attention-based weakly supervised learning is a premier method for classifying whole-slide images. I explored several adversarial attack strategies against this kind of histopathology AI. I found that, by leveraging the attention mechanism, perturbing only the few tiles with the highest attention weights is enough to massively degrade model accuracy. If drug-seeking behavior is a common way of abusing human doctors, will there be an economic incentive to perform adversarial attacks that trick AI-based diagnosis pipelines in the future?
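The core idea of a top-k attention attack can be sketched as follows. This is a minimal illustration under stated assumptions, not the code or exact method from the paper: it assumes plain attention-MIL pooling (softmax attention over tanh-projected tile embeddings), a toy linear tile encoder standing in for the CNN feature extractor, random weights, and L∞ projected gradient descent (PGD) as the attack. The names `ToyAttentionMIL` and `top_k_attention_attack` and all hyperparameters are hypothetical. Tiles are chosen once from the clean attention weights, and only those tiles are perturbed to push the slide-level logit down.

```python
import numpy as np


def softmax(s):
    # Numerically stable softmax over tiles.
    e = np.exp(s - s.max())
    return e / e.sum()


class ToyAttentionMIL:
    """Hypothetical attention-MIL slide classifier with random weights.

    A linear map `We` stands in for the CNN that embeds each tile (assumption).
    """

    def __init__(self, n_pixels, d=16, l=8, seed=0):
        rng = np.random.default_rng(seed)
        self.We = rng.normal(0, 0.1, (d, n_pixels))  # tile pixels -> embedding
        self.V = rng.normal(0, 0.5, (l, d))          # attention hidden layer
        self.w = rng.normal(0, 0.5, l)               # attention scoring vector
        self.c = rng.normal(0, 0.5, d)               # slide classifier weights
        self.b = 0.0

    def forward(self, X):
        # X: (n_tiles, n_pixels) flattened tiles of one slide, values in [0, 1].
        H = X @ self.We.T                    # (n_tiles, d) tile embeddings
        U = np.tanh(H @ self.V.T)            # (n_tiles, l)
        a = softmax(U @ self.w)              # (n_tiles,) attention weights
        z = a @ H                            # (d,) attention-pooled slide embedding
        logit = float(self.c @ z + self.b)   # > 0 means "positive" in this toy
        return logit, a, H, U

    def grad_logit(self, X):
        # Analytic gradient of the slide logit w.r.t. every tile's pixels.
        logit, a, H, U = self.forward(X)
        g = H @ self.c                        # per-tile score c . h_i
        ds = a * (g - (logit - self.b))       # d logit / d attention score s_i
        dH = a[:, None] * self.c[None, :] + ds[:, None] * (((1 - U**2) * self.w) @ self.V)
        return dH @ self.We                   # (n_tiles, n_pixels)


def top_k_attention_attack(model, X, k=3, eps=8 / 255, alpha=2 / 255, steps=10):
    """L-inf PGD restricted to the k highest-attention tiles, lowering the logit."""
    _, a, _, _ = model.forward(X)
    top = np.argsort(a)[-k:]                  # k most-attended tiles (clean pass)
    mask = np.zeros_like(X)
    mask[top] = 1.0                           # only these rows may change
    X_adv = X.copy()
    best_X, best_logit = X.copy(), model.forward(X)[0]
    for _ in range(steps):
        X_adv = X_adv - alpha * np.sign(model.grad_logit(X_adv)) * mask
        X_adv = np.clip(X_adv, X - eps, X + eps)   # project into the eps-ball
        X_adv = np.clip(X_adv, 0.0, 1.0)           # keep valid pixel values
        logit = model.forward(X_adv)[0]
        if logit < best_logit:                      # keep the strongest iterate
            best_X, best_logit = X_adv.copy(), logit
    return best_X, top


rng = np.random.default_rng(1)
X = rng.uniform(0, 1, (50, 64))               # 50 toy tiles of 8x8 pixels
model = ToyAttentionMIL(n_pixels=64)
clean_logit = model.forward(X)[0]
X_adv, top = top_k_attention_attack(model, X, k=3)
print("clean logit:", clean_logit, "attacked logit:", model.forward(X_adv)[0])
```

Restricting the perturbation to the top-k tiles keeps the attack sparse: the rest of the slide is untouched, yet those tiles dominate the attention-pooled embedding that the classifier sees. In a real pipeline the gradient would come from autograd through the actual CNN encoder rather than from this hand-derived toy.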

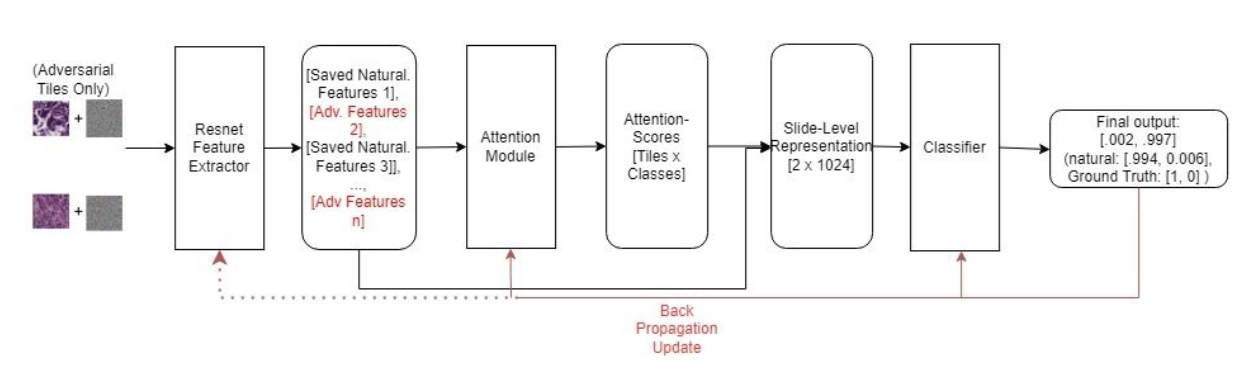

I also propose computationally cheap methods for training models that are robust against such an attack.

Paper/poster presented at the ICML BioComp Workshop.